$N(t_R)$, $n(t_R, C)$, and $n(t_R, NC)$ are the total number of samples, ‘C’ samples and ‘NC’ samples at the right child node, respectively.

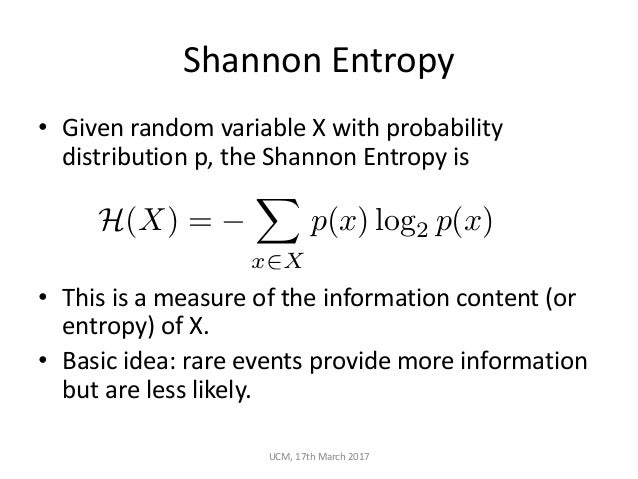

$N(t_L)$, $n(t_L, C)$, and $n(t_L, NC)$ are the total number of samples, ‘C’ samples and ‘NC’ samples at the left child node, respectively. From these counts, the class probabilities at each child node are:

- Probability of selecting a class ‘NC’ sample at the right child node: $p_{NC,R} = n(t_R, NC) / N(t_R)$
- Probability of selecting a class ‘C’ sample at the right child node: $p_{C,R} = n(t_R, C) / N(t_R)$
- Probability of selecting a class ‘NC’ sample at the left child node: $p_{NC,L} = n(t_L, NC) / N(t_L)$
- Probability of selecting a class ‘C’ sample at the left child node: $p_{C,L} = n(t_L, C) / N(t_L)$

The entropy at the left and right child nodes of the above decision tree is then computed using the formulae (with $0 \log_2 0$ taken as 0):

$$E(t_L) = -p_{C,L}\log_2 p_{C,L} - p_{NC,L}\log_2 p_{NC,L}$$

$$E(t_R) = -p_{C,R}\log_2 p_{C,R} - p_{NC,R}\log_2 p_{NC,R}$$

When the input at a node becomes homogeneous (all samples belong to a single class, as shown in the rightmost figure in the Entropy Diagram above), its entropy is 0 and the data at that node are no longer useful for learning further splits. However, such a subset is still useful for learning the attributes of the mutations used to split the node.

For example, consider the following table.

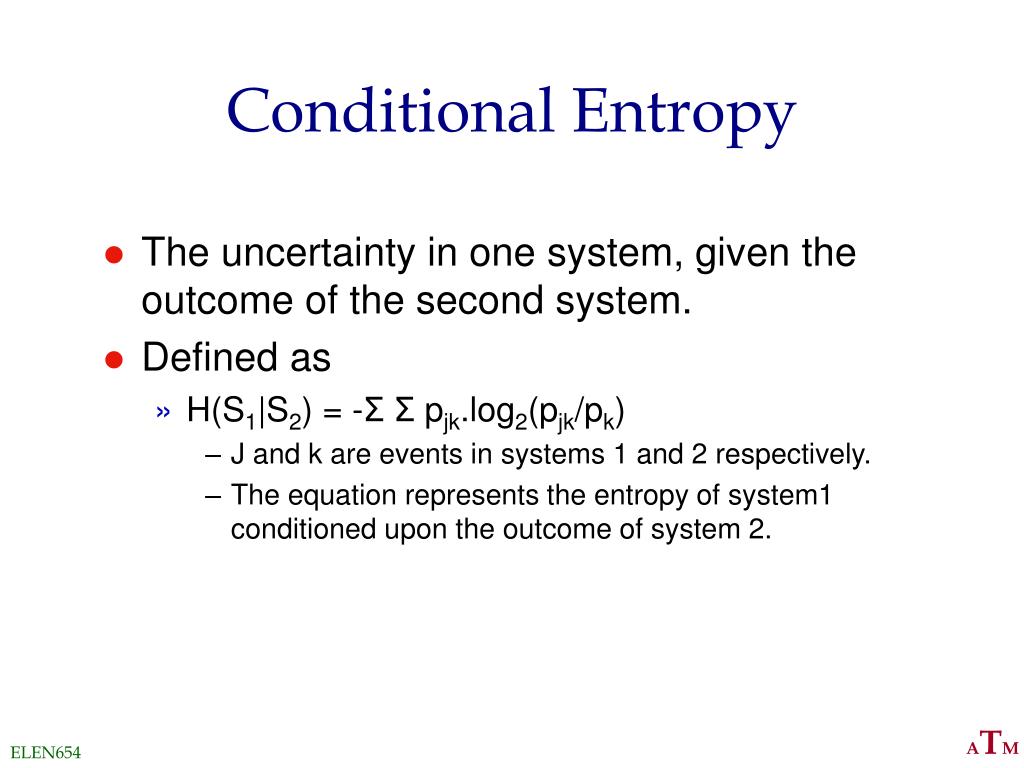
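As a minimal sketch (in Python, not taken from the original post), the child-node probabilities and binary entropies described above can be computed directly from a node's class counts. The function name `node_entropy` and the counts used below are hypothetical, chosen only for illustration; they are not the post's data.

```python
import math

def node_entropy(n_c: int, n_nc: int) -> float:
    """Binary entropy (in bits) of a node holding n_c 'C' and n_nc 'NC' samples."""
    total = n_c + n_nc  # N(t): total samples at the node
    if total == 0:
        return 0.0
    entropy = 0.0
    for count in (n_c, n_nc):
        p = count / total  # p_C or p_NC at this node
        if p > 0:  # 0 * log2(0) is taken as 0
            entropy -= p * math.log2(p)
    return entropy

# Hypothetical counts for illustration only.
left = {"C": 3, "NC": 1}   # n(t_L, C), n(t_L, NC)
right = {"C": 2, "NC": 2}  # n(t_R, C), n(t_R, NC)

print(f"E(t_L) = {node_entropy(left['C'], left['NC']):.4f}")    # E(t_L) = 0.8113
print(f"E(t_R) = {node_entropy(right['C'], right['NC']):.4f}")  # E(t_R) = 1.0000
print(f"Pure node: {node_entropy(4, 0):.4f}")                   # Pure node: 0.0000
```

An evenly mixed node reaches the two-class maximum of 1 bit, while a homogeneous node has entropy 0.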

In information theory and machine learning, information gain is a synonym for Kullback–Leibler divergence: the amount of information gained about a random variable or signal from observing another random variable. The information gain of a random variable $X$ obtained from an observation of a random variable $A$ taking value $A = a$ is the Kullback–Leibler divergence $D_{\mathrm{KL}}\big(P_X(x \mid a) \,\|\, P_X(x)\big)$ between the posterior distribution of $X$ given $A = a$ and its prior distribution. However, in the context of decision trees, the term is sometimes used synonymously with mutual information, which is the conditional expected value of the Kullback–Leibler divergence of the univariate probability distribution of one variable from the conditional distribution of this variable given the other one.

An entropy of 1 at the root node (the maximum possible value for two classes) suggests that the root node is highly impure and that the constituents of the input at the root node would look like the leftmost figure in the Entropy Diagram above.
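The sketch below (Python, hypothetical counts) uses the standard ID3-style formulation, which the text does not spell out: the information gain of a split is the parent node's entropy minus the sample-weighted average of the child entropies. It also computes, from the same counts, the mutual information between the class label and the branch taken, to illustrate why the two terms are used synonymously for decision trees. The function names are illustrative, not from any particular library.

```python
import math

def entropy(counts):
    """Shannon entropy in bits of a node, given its per-class sample counts."""
    total = sum(counts)
    h = 0.0
    for c in counts:
        if c > 0:  # empty classes contribute 0 (0 * log2(0) := 0)
            p = c / total
            h -= p * math.log2(p)
    return h

def information_gain(parent, children):
    """Parent entropy minus the sample-weighted entropy of the child nodes."""
    n = sum(parent)
    weighted = sum(sum(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted

def mutual_information(children):
    """Mutual information (bits) between class label and branch taken,
    estimated from the joint count table children[branch][class]."""
    n = sum(sum(child) for child in children)
    class_totals = [sum(col) for col in zip(*children)]
    mi = 0.0
    for child in children:
        p_branch = sum(child) / n
        for k, c in enumerate(child):
            if c > 0:
                p_joint = c / n
                p_class = class_totals[k] / n
                mi += p_joint * math.log2(p_joint / (p_branch * p_class))
    return mi

# Hypothetical counts, ordered [n('C'), n('NC')].
left, right = [3, 1], [2, 2]
parent = [l + r for l, r in zip(left, right)]  # [5, 3]

print(f"E(root) = {entropy(parent):.4f}")                    # E(root) = 0.9544
print(f"IG = {information_gain(parent, [left, right]):.4f}")  # IG = 0.0488
print(f"MI = {mutual_information([left, right]):.4f}")        # MI = 0.0488
```

For these counts both quantities come out to about 0.0488 bits, so the split barely reduces impurity. A perfectly separating split such as `[[4, 0], [0, 4]]` from a `[4, 4]` root would instead give a gain of 1 bit, the full entropy of the root.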
